The Engine Core

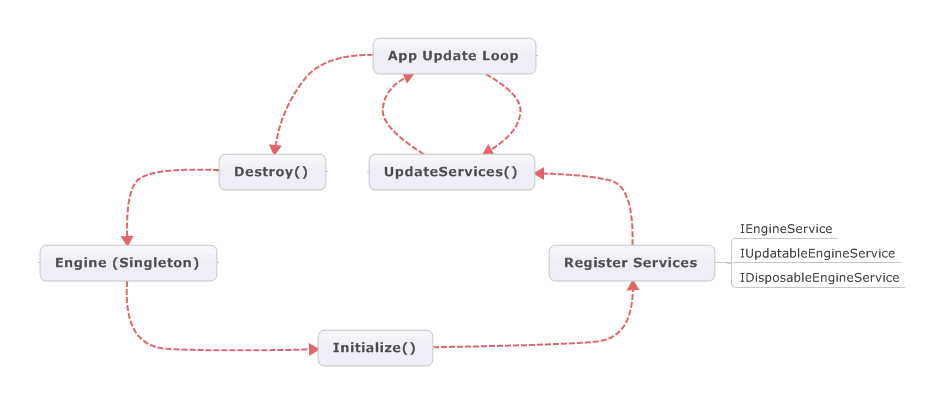

Before I dive into the graphics system, I feel it’s important to talk a bit about the general organization of the engine core, of which the render system is only one component. It’s the most important component, of course, but it fits snugly into an overall design that componentizes each system and allows flexibility in adding new functionality and new implementations.

The gist of the engine hasn’t changed all that much from the previous design. There’s still a root “Engine” object, and organization still revolves around the concept of service managers. It’s just that the general design has been refined and expanded a bit more. Also, all of these objects/interfaces now live in the “Tesla” namespace, as “Tesla.Core” no longer exists.

The engine object is now a singleton rather than a static class. It’s the only singleton I hope to have in the overall design, and that’s only so it can serve as a central root to query services from. The only thing an engine instance holds is its EngineServiceRegistry, the container that services are registered to and queried from. There are still static methods to initialize and destroy the engine, as well as static events to notify interested parties.
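
To make this more concrete, here is a rough C# sketch of such a root object. It is a singleton whose only state is its service registry, plus static initialize/destroy methods and static events. The member names (`Instance`, `Initialize`, `Destroy`, `EngineInitialized`, `EngineDestroyed`) are assumptions for illustration, not the engine’s actual API, and the registry is an empty placeholder.

```csharp
using System;

// Hypothetical placeholder; the real registry holds the registered services.
public sealed class EngineServiceRegistry { }

// Sketch of the singleton root: its only state is the service registry.
public sealed class Engine
{
    private static Engine _instance;

    // Static events so interested parties can react to engine lifetime changes (names assumed).
    public static event EventHandler EngineInitialized;
    public static event EventHandler EngineDestroyed;

    public EngineServiceRegistry Services { get; private set; }

    private Engine() { Services = new EngineServiceRegistry(); }

    // Null until Initialize() has been called.
    public static Engine Instance { get { return _instance; } }
    public static bool IsInitialized { get { return _instance != null; } }

    public static Engine Initialize()
    {
        if (_instance != null)
            throw new InvalidOperationException("Engine is already initialized.");
        _instance = new Engine();
        EventHandler handler = EngineInitialized;
        if (handler != null) handler(_instance, EventArgs.Empty);
        return _instance;
    }

    public static void Destroy()
    {
        if (_instance == null) return;
        Engine destroyed = _instance;
        _instance = null;
        EventHandler handler = EngineDestroyed;
        if (handler != null) handler(destroyed, EventArgs.Empty);
    }
}
```

Because the lifetime methods are static and the registry lives on the instance, code can ask `Engine.Instance` for services without the engine holding any other global state.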

An engine service is nothing more than a manager: an entry point into some component that handles core functionality. Graphics, input, windowing, etc. are each their own independent component. If a component relies on another, it can query that component by its interface from the engine root object. So the design decouples these various systems and discourages the use of singletons for manager objects. It also makes creating implementations easy and straightforward, since everything is interface based.
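
Here is a minimal sketch of querying a dependency by interface, assuming a registry keyed on the service’s interface type. `ServiceRegistry`, `Add<T>`, `GetService<T>`, and the demo classes are hypothetical names. The point is only that the render system never references a concrete window system type.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical service interfaces standing in for the engine's real ones.
public interface IWindowSystem { string CreateWindow(string title); }
public interface IRenderSystem { string Name { get; } }

// Minimal registry keyed by the service's interface type (a sketch, not the engine's API).
public sealed class ServiceRegistry
{
    private readonly Dictionary<Type, object> _services = new Dictionary<Type, object>();

    public void Add<T>(T service) where T : class
    {
        if (service == null) throw new ArgumentNullException("service");
        _services[typeof(T)] = service;
    }

    public T GetService<T>() where T : class
    {
        object service;
        return _services.TryGetValue(typeof(T), out service) ? (T)service : null;
    }
}

// A render system that depends on windowing only through its interface.
public sealed class DemoRenderSystem : IRenderSystem
{
    private readonly IWindowSystem _windows;

    public DemoRenderSystem(ServiceRegistry registry)
    {
        _windows = registry.GetService<IWindowSystem>();
        if (_windows == null)
            throw new InvalidOperationException("An IWindowSystem must be registered first.");
    }

    public string Name { get { return "Demo"; } }
    public string OpenMainWindow() { return _windows.CreateWindow("Main"); }
}

public sealed class DemoWindowSystem : IWindowSystem
{
    public string CreateWindow(string title) { return "Window:" + title; }
}
```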

And of course, this design allows the engine to add new services easily, so it can grow and expand in functionality. Developers can also use this scheme to add their own application-specific services, much like XNA’s concept of “game services”.

The previous design did not specify a common engine service interface; any interface could be registered to the service registry. So the biggest change is that all services are now codified into three interfaces:

- IEngineService

- IUpdatableEngineService

- IDisposableEngineService

The last two should be fairly obvious: they add Update() and Dispose() methods, respectively. Every engine service has a name, as well as an initialize method that takes in the engine object. The service registry also has events for when a service is added, removed, or changed. There is also a notion of being able to “hot swap” an active service with a different implementation. Theoretically, given how the graphics resources are designed, individual graphics objects could be “hot swapped” too, although I don’t have much interest in implementing that (because of shaders, which would get too dicey as far as content is concerned). At best this would be an automatic content unload/reload cycle that gets kicked off by, say, a user option being changed.
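
The following sketch shows one way the three interfaces and the registry events could fit together. Several details are assumptions made for illustration: the member signatures, the `ServiceEventArgs` type, and the rule that registering a second service under the same interface counts as a “change” (a hot swap).

```csharp
using System;
using System.Collections.Generic;

// Placeholder root object so the sketch compiles on its own.
public sealed class Engine { }

// The three service interfaces; member signatures are assumptions based on the description.
public interface IEngineService
{
    string Name { get; }
    void Initialize(Engine engine);
}
public interface IUpdatableEngineService : IEngineService { void Update(); }
public interface IDisposableEngineService : IEngineService, IDisposable { }

public sealed class ServiceEventArgs : EventArgs
{
    public ServiceEventArgs(Type serviceType, IEngineService oldService, IEngineService newService)
    {
        ServiceType = serviceType; OldService = oldService; NewService = newService;
    }
    public Type ServiceType { get; private set; }
    public IEngineService OldService { get; private set; }
    public IEngineService NewService { get; private set; }
}

// Registry raising added/removed/changed events; re-registering a type "hot swaps" the service.
public sealed class EngineServiceRegistry
{
    private readonly Engine _engine;
    private readonly Dictionary<Type, IEngineService> _services = new Dictionary<Type, IEngineService>();

    public EngineServiceRegistry(Engine engine) { _engine = engine; }

    public event EventHandler<ServiceEventArgs> ServiceAdded;
    public event EventHandler<ServiceEventArgs> ServiceRemoved;
    public event EventHandler<ServiceEventArgs> ServiceChanged;

    public void AddService<T>(T service) where T : class, IEngineService
    {
        if (service == null) throw new ArgumentNullException("service");
        IEngineService old;
        bool replacing = _services.TryGetValue(typeof(T), out old);

        service.Initialize(_engine);
        _services[typeof(T)] = service;
        Raise(replacing ? ServiceChanged : ServiceAdded, new ServiceEventArgs(typeof(T), old, service));
    }

    public bool RemoveService<T>() where T : class, IEngineService
    {
        IEngineService old;
        if (!_services.TryGetValue(typeof(T), out old)) return false;
        _services.Remove(typeof(T));
        Raise(ServiceRemoved, new ServiceEventArgs(typeof(T), old, null));
        return true;
    }

    public T GetService<T>() where T : class, IEngineService
    {
        IEngineService service;
        return _services.TryGetValue(typeof(T), out service) ? service as T : null;
    }

    private void Raise(EventHandler<ServiceEventArgs> handler, ServiceEventArgs args)
    {
        if (handler != null) handler(this, args);
    }
}

// Hypothetical service used to exercise the registry.
public interface ILoggingSystem : IEngineService { }
public sealed class NullLoggingSystem : ILoggingSystem
{
    public string Name { get { return "NullLogger"; } }
    public bool IsInitialized { get; private set; }
    public void Initialize(Engine engine) { IsInitialized = true; }
}
```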

A diagram charting the life-cycle of the engine is below:

Updatable Engine Services

The idea of services that need a notification to update themselves was born from a design change in the input system. In the previous design of the engine, the mouse/keyboard services would poll the device whenever they were queried for their state (usually with a P/Invoke call or, for raw input, by returning the current state based on the events received so far). I didn’t like that design much, and wanted to opt for a single point in the loop where the input device is polled once, with that state then used for the duration of the update.

The engine has a static method called UpdateServices() that calls into the service manager, and unsurprisingly loops through the relevant services.
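
Here is a sketch of that per-frame update, assuming `UpdateServices` simply filters the registered services for `IUpdatableEngineService`. The hypothetical `PolledKeyboardService` reads its device once per update, with the `Func<int>` standing in for a P/Invoke or raw-input read. It then hands out that cached snapshot until the next update. `EngineLoop` is an assumed name.

```csharp
using System;
using System.Collections.Generic;

public interface IEngineService { string Name { get; } }
public interface IUpdatableEngineService : IEngineService { void Update(); }

// Hypothetical keyboard service: polls once per Update() and caches the snapshot,
// so every query during the frame sees the same state.
public sealed class PolledKeyboardService : IUpdatableEngineService
{
    private readonly Func<int> _pollDevice;   // stands in for a P/Invoke or raw-input read

    public PolledKeyboardService(Func<int> pollDevice) { _pollDevice = pollDevice; }

    public string Name { get { return "Keyboard"; } }
    public int CurrentState { get; private set; }
    public int PollCount { get; private set; }

    public void Update()
    {
        CurrentState = _pollDevice();
        PollCount++;
    }
}

public static class EngineLoop
{
    // Sketch of Engine.UpdateServices(): update only the services that opt in.
    public static void UpdateServices(IEnumerable<IEngineService> services)
    {
        foreach (IEngineService service in services)
        {
            IUpdatableEngineService updatable = service as IUpdatableEngineService;
            if (updatable != null) updatable.Update();
        }
    }
}
```

Reading `CurrentState` any number of times between updates never touches the device again, which is the “poll once, use for the whole update” behavior described above.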

The Service Registry

Some additional notes on the registry that contains all the engine services: it also implements the IServiceProvider interface. The registry can also be used with the ContentManager to query services when ISavable objects are read from an input stream. The biggest use case is letting graphics resources get hold of an IRenderSystem so they can construct their implementations (more on this later in the post).
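
Because the registry implements `System.IServiceProvider`, code that only knows about the BCL interface can still find engine services. Here is a minimal sketch. `TextureReader` is a hypothetical stand-in for a graphics resource being read back through the content manager, and `AddService(Type, object)` is an assumed registration method.

```csharp
using System;
using System.Collections.Generic;

public interface IRenderSystem { string Platform { get; } }
public sealed class DummyRenderSystem : IRenderSystem { public string Platform { get { return "Dummy"; } } }

// The registry doubles as a System.IServiceProvider, so code that only knows the BCL
// interface (e.g. a content loader) can still look up engine services.
public sealed class EngineServiceRegistry : IServiceProvider
{
    private readonly Dictionary<Type, object> _services = new Dictionary<Type, object>();

    public void AddService(Type serviceType, object service) { _services[serviceType] = service; }

    public object GetService(Type serviceType)
    {
        object service;
        return _services.TryGetValue(serviceType, out service) ? service : null;
    }
}

// Hypothetical stand-in for a graphics resource being read from a stream.
public static class TextureReader
{
    public static string Read(IServiceProvider services)
    {
        IRenderSystem renderSystem = services.GetService(typeof(IRenderSystem)) as IRenderSystem;
        if (renderSystem == null)
            throw new InvalidOperationException("No IRenderSystem registered; cannot create the implementation.");
        return "Texture created on " + renderSystem.Platform;
    }
}
```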

I also have a good example of the “hot swap” ability I mentioned earlier. It’s used internally for the input system. Even though input is handled by engine services, the engine still has static “Mouse” and “Keyboard” classes to easily query device state. These exist purely for convenience, as all they do is wrap the engine services. Their static constructors query for the engine object and, if it’s valid, ask for the corresponding service, if it exists. They also hook into engine events, so if the service is changed, the static wrapper is notified of the change.
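
Here is a rough sketch of such a static wrapper. It assumes a registry that raises a `ServiceChanged` event carrying the service type and the new instance. `Engine`, `ServiceRegistry`, `SetService`, and `FakeMouse` are simplified, hypothetical stand-ins.

```csharp
using System;
using System.Collections.Generic;

public interface IMouseInputSystem { int X { get; } }
public sealed class FakeMouse : IMouseInputSystem
{
    public FakeMouse(int x) { X = x; }
    public int X { get; private set; }
}

// Simplified registry: setting null removes the service; every change raises the event.
public sealed class ServiceRegistry
{
    private readonly Dictionary<Type, object> _services = new Dictionary<Type, object>();
    public event Action<Type, object> ServiceChanged;   // (service type, new service or null)

    public void SetService(Type type, object service)
    {
        if (service == null) _services.Remove(type); else _services[type] = service;
        Action<Type, object> handler = ServiceChanged;
        if (handler != null) handler(type, service);
    }

    public object GetService(Type type)
    {
        object s;
        return _services.TryGetValue(type, out s) ? s : null;
    }
}

public sealed class Engine
{
    private Engine() { Services = new ServiceRegistry(); }
    public static Engine Instance { get; private set; }
    public ServiceRegistry Services { get; private set; }
    public static Engine Initialize() { Instance = new Engine(); return Instance; }
}

// Static convenience wrapper: grabs the current service once, then follows hot swaps.
public static class Mouse
{
    private static IMouseInputSystem _system;

    static Mouse()
    {
        Engine engine = Engine.Instance;
        if (engine == null) return;   // engine not initialized: wrapper stays inert in this sketch
        _system = engine.Services.GetService(typeof(IMouseInputSystem)) as IMouseInputSystem;
        engine.Services.ServiceChanged += (type, service) =>
        {
            if (type == typeof(IMouseInputSystem)) _system = service as IMouseInputSystem;
        };
    }

    public static bool IsAvailable { get { return _system != null; } }
    public static int X { get { return _system != null ? _system.X : 0; } }
}
```

This sketch only handles the case where the engine already exists when `Mouse` is first touched. The wrapper described above also hooks the engine’s own events; that part is omitted here.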

Platform Initializers

When the engine is initialized, it is service-less (a departure from the old design, where the engine had to be initialized with a render system). There is an overload of the initialize method that takes an IPlatformInitializer object. The idea is to group services into a single “platform” and use it to initialize the engine (i.e. register its services). Being able to group services together also lends itself to plugin or configuration file capabilities, to automatically initialize the engine with your services.
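
Here is a sketch of a platform initializer under assumed names. `IPlatformInitializer.Initialize(ServiceRegistry)`, `DesktopPlatform`, and the stub services are hypothetical, and singleton bookkeeping is left out.

```csharp
using System;
using System.Collections.Generic;

public interface IEngineService { string Name { get; } }

public sealed class ServiceRegistry
{
    private readonly Dictionary<Type, IEngineService> _services = new Dictionary<Type, IEngineService>();
    public void AddService(Type serviceType, IEngineService service) { _services[serviceType] = service; }
    public int Count { get { return _services.Count; } }
    public bool Contains(Type serviceType) { return _services.ContainsKey(serviceType); }
}

// Groups the services of one "platform" so they can be registered in a single call.
public interface IPlatformInitializer
{
    void Initialize(ServiceRegistry services);
}

public interface IWindowSystem : IEngineService { }
public interface ILoggingSystem : IEngineService { }
public sealed class StubWindowSystem : IWindowSystem { public string Name { get { return "StubWindows"; } } }
public sealed class ConsoleLoggingSystem : ILoggingSystem { public string Name { get { return "ConsoleLog"; } } }

// Hypothetical platform that bundles a window system and a logger.
public sealed class DesktopPlatform : IPlatformInitializer
{
    public void Initialize(ServiceRegistry services)
    {
        services.AddService(typeof(IWindowSystem), new StubWindowSystem());
        services.AddService(typeof(ILoggingSystem), new ConsoleLoggingSystem());
    }
}

public sealed class Engine
{
    private Engine() { Services = new ServiceRegistry(); }
    public ServiceRegistry Services { get; private set; }

    // Service-less initialization, as in the new design.
    public static Engine Initialize() { return new Engine(); }

    // Overload that lets a platform register its services up front.
    public static Engine Initialize(IPlatformInitializer platform)
    {
        if (platform == null) throw new ArgumentNullException("platform");
        Engine engine = Initialize();
        platform.Initialize(engine.Services);
        return engine;
    }
}
```

A plugin or configuration file could then name an initializer type to instantiate, instead of hard-coding the service list.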

Types of Services

I briefly mentioned some of these earlier in the post. The current and planned services are:

- IRenderSystem – Graphics, duh!

- IMouseInputSystem – Handles device creation and querying state (no more mouse wrapper)

- IKeyboardInputSystem – Handles device creation and querying state (no more keyboard wrapper)

- IWindowSystem – Handles creation of windows to render to.

- ILoggingSystem – Handles a logging mechanism to log info, warn, and error messages.

- IAudioSystem – No implementation yet; it’s been on the wish list… for a very long time.

Also notice that the naming scheme has changed. The usage of “Provider” has been dropped.

The Render System

Drum roll please! Like the rest of the engine core, the render system isn’t a radical redesign; it’s more of a refinement. Still, there are plenty of changes to the design. What hasn’t changed is the use of the Bridge pattern: there is still the concept of an “abstraction” object that has an “implementation”.

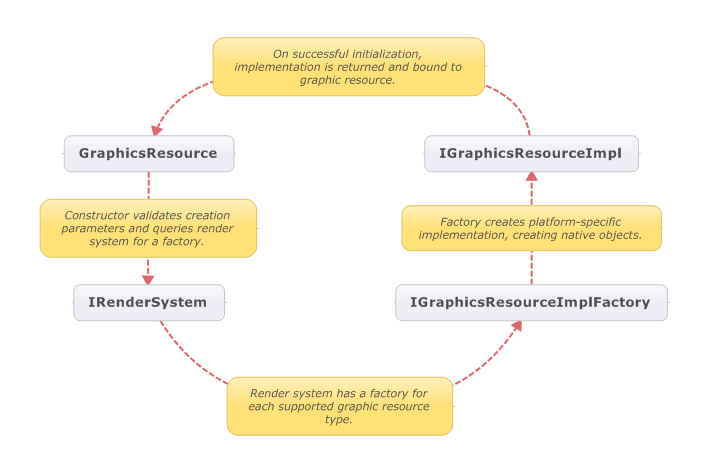

The graphics system is made up of many objects: buffers, effects, textures. Each of these objects subclasses the GraphicsResource base class and has an implementation that inherits from the IGraphicsResourceImpl interface. This is somewhat of a departure from the previous engine design, where the implementations were all abstract classes. There are now a lot more object types (including texture arrays!), and refining the abstract implementation classes to cover them all made things too cumbersome for the actual implementations. This is mostly due to single inheritance in C#, so making the implementations interfaces and moving validation up a level made a lot more sense, since it reduces redundancy on the platform side.

The design has also shifted so that each graphics resource type has a corresponding implementation interface. In the old design, for example, RenderTarget2D and Texture2D shared the same abstract implementation object; now there is an ITexture2DImpl and an IRenderTarget2DImpl (which inherits from ITexture2DImpl).

And the magic that binds these two objects together is another interface, an IGraphicsResourceImplFactory. The IRenderSystem is a container for implementation factories, rather than being the object to create the implementation like in the old design. When a graphics resource is created, it queries the render system for the appropriate factory, which then creates the implementation. Hopefully you can see two good features of this setup:

- We can add more functionality to the render system over time, since it’s completely factory based.

- Each graphics resource can be treated as a “feature”. A render system can support all, or only a subset of the engine’s graphic resources. For example, textures are universally supported, but maybe texture arrays are not. Since a texture array is a separate object from a texture, its implementation factory would not be registered to the render system. Therefore that “feature” would not be available.
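
The “feature” idea boils down to a lookup keyed on the factory interface. The sketch below uses the hypothetical names `AddFactory<T>`, `GetFactory<T>`, and `IsSupported<T>`. Its backend registers a 2D texture factory but no texture array factory.

```csharp
using System;
using System.Collections.Generic;

public interface IGraphicsResourceImplFactory { }
public interface ITexture2DImplFactory : IGraphicsResourceImplFactory { }
public interface ITexture2DArrayImplFactory : IGraphicsResourceImplFactory { }

// A render system as a container of implementation factories: each registered factory is a "feature".
public class RenderSystem
{
    private readonly Dictionary<Type, IGraphicsResourceImplFactory> _factories =
        new Dictionary<Type, IGraphicsResourceImplFactory>();

    // New resource types can be supported later just by registering another factory.
    protected void AddFactory<T>(T factory) where T : class, IGraphicsResourceImplFactory
    {
        if (factory == null) throw new ArgumentNullException("factory");
        _factories[typeof(T)] = factory;
    }

    public T GetFactory<T>() where T : class, IGraphicsResourceImplFactory
    {
        IGraphicsResourceImplFactory factory;
        return _factories.TryGetValue(typeof(T), out factory) ? factory as T : null;
    }

    public bool IsSupported<T>() where T : class, IGraphicsResourceImplFactory
    {
        return GetFactory<T>() != null;
    }
}

// Hypothetical backend that supports plain 2D textures but not texture arrays.
public sealed class Texture2DFactory : ITexture2DImplFactory { }
public sealed class LimitedRenderSystem : RenderSystem
{
    public LimitedRenderSystem()
    {
        AddFactory<ITexture2DImplFactory>(new Texture2DFactory());
    }
}
```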

Say we want a vertex buffer with some vertex declaration and a capacity of 20 vertices. We create it like so:

```csharp
VertexBuffer vb = new VertexBuffer(renderSystem, vertexDecl, 20, ResourceUsage.Static);
```

Notice that constructing graphics objects now requires the render system. This, like every other resource type, kicks off a creation process that is illustrated in the diagram below:

The only reason the implementation objects were abstract classes rather than interfaces in the previous engine design was to validate creation input. Now all of that has moved to the primary graphics object (e.g. VertexBuffer rather than VertexBufferImplementation). Validation happens at the “top level” of the graphics system rather than in the implementation, in order to enforce consistency across all implementations. Once creation passes validation, the implementation only has to worry about creating the native objects. If a render system does not support a graphics feature, a graphics exception is thrown in the constructor of the graphics resource.
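
Putting the pieces together, here is a sketch of the creation flow behind the `new VertexBuffer(...)` call above. It validates first, then looks up the factory, then lets the implementation create the native object. Only `VertexBuffer`, `ResourceUsage.Static`, and the constructor argument order come from the example. Everything else (`VertexDeclaration(int)`, `IVertexBufferImplFactory.CreateImplementation`, `GraphicsException`, the software backend) is a simplified, hypothetical stand-in.

```csharp
using System;
using System.Collections.Generic;

public enum ResourceUsage { Static, Dynamic }

// Hypothetical stand-ins; the real declaration and impl interfaces carry much more.
public sealed class VertexDeclaration
{
    public VertexDeclaration(int vertexStride) { VertexStride = vertexStride; }
    public int VertexStride { get; private set; }
}
public interface IVertexBufferImpl { int VertexCount { get; } }
public interface IVertexBufferImplFactory
{
    IVertexBufferImpl CreateImplementation(VertexDeclaration decl, int vertexCount, ResourceUsage usage);
}

public sealed class GraphicsException : Exception
{
    public GraphicsException(string message) : base(message) { }
}

public sealed class RenderSystem
{
    private readonly Dictionary<Type, object> _factories = new Dictionary<Type, object>();
    public void AddFactory<T>(T factory) where T : class { _factories[typeof(T)] = factory; }
    public T GetFactory<T>() where T : class
    {
        object f;
        return _factories.TryGetValue(typeof(T), out f) ? f as T : null;
    }
}

public sealed class VertexBuffer
{
    private readonly IVertexBufferImpl _impl;

    public VertexBuffer(RenderSystem renderSystem, VertexDeclaration decl, int vertexCount, ResourceUsage usage)
    {
        // 1. Validation lives here, at the top level, so every backend behaves the same.
        if (renderSystem == null) throw new ArgumentNullException("renderSystem");
        if (decl == null) throw new ArgumentNullException("decl");
        if (vertexCount <= 0) throw new ArgumentOutOfRangeException("vertexCount", "Vertex count must be positive.");

        // 2. Look up the feature's factory; a missing factory means the feature is unsupported.
        IVertexBufferImplFactory factory = renderSystem.GetFactory<IVertexBufferImplFactory>();
        if (factory == null)
            throw new GraphicsException("The render system does not support VertexBuffer.");

        // 3. The implementation only has to create the native object.
        _impl = factory.CreateImplementation(decl, vertexCount, usage);
    }

    public int VertexCount { get { return _impl.VertexCount; } }
}

// Hypothetical backend used for the example.
public sealed class SoftwareVertexBufferFactory : IVertexBufferImplFactory
{
    private sealed class Impl : IVertexBufferImpl
    {
        public Impl(int count) { VertexCount = count; }
        public int VertexCount { get; private set; }
    }

    public IVertexBufferImpl CreateImplementation(VertexDeclaration decl, int vertexCount, ResourceUsage usage)
    {
        return new Impl(vertexCount);
    }
}
```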

A list of graphic objects that are presently in the engine core:

- Texture1D, Texture2D, Texture3D, TextureCube

- Texture1DArray, Texture2DArray

- RenderTarget2D, RenderTargetCube

- VertexBuffer, StreamOutputBuffer, IndexBuffer

- SwapChain, OcclusionQuery, Effect

- RasterizerState, SamplerState, BlendState, DepthStencilState

Each of these objects has a corresponding IXXXImpl and IXXXImplFactory interface.

Now, this organization also allows for features that may be specific to certain platforms. For example, WPF support is (presently) a Windows-only feature that would exist as a graphics object/implementation/factory trio in Tesla.Windows. Another example is an API-specific feature such as compute shaders and UAVs, which (so far) are only available in Direct3D11. It’s something I hope to support in the future!

How will the engine support them, you ask? Resource creation will obviously work the same way I’ve already outlined, but we also need run-time support for using the objects. That’s where Render Extensions come into play. But that’s for another post!

There are still plenty of render system topics to go over in the future, details that are out of scope for this general overview. They should make for more interesting posts, as I’m trying to strike a balance between the old API (which supported XNA; I do want to keep that, and go with MonoGame support) and a newer API that supports Direct3D11 with SharpDX. It’s a tricky balancing act, fraught with lots of thought and frustration over how to achieve API harmony. The API design has reached the point where I’m just about happy with it, and confident enough to start talking about it.

And of course, if I had more time, this would already be live by now (the code I mean) – oh well! See you next time!
